This web page is now available in PDF format!

This eight-page publication features:

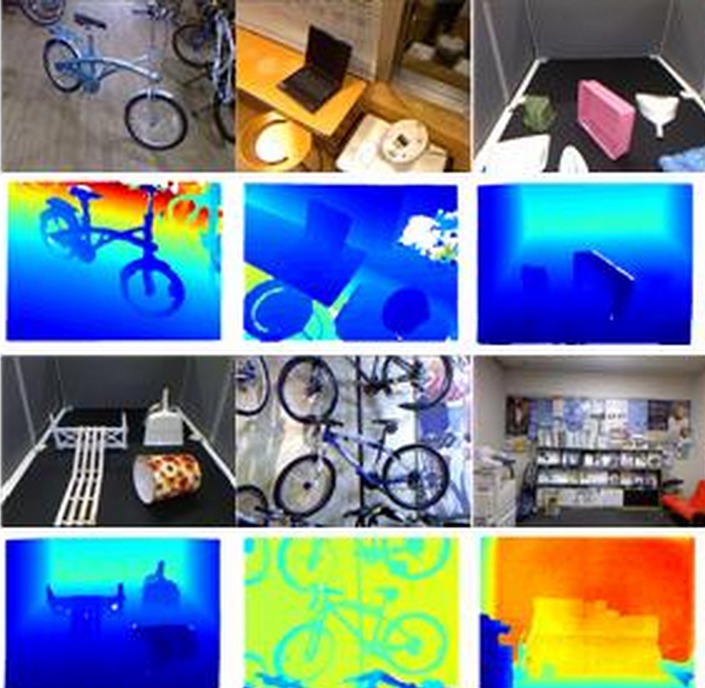

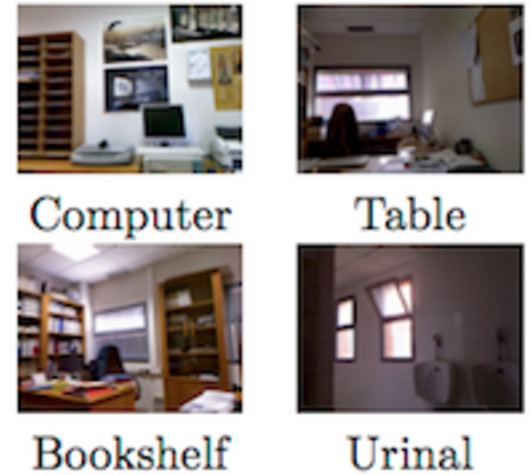

- 102 datasets, some of which are not yet listed on this website

- A critical assessment of existing datasets

- Ideas for the future of RGBD data

- Graphs!

- Tables!

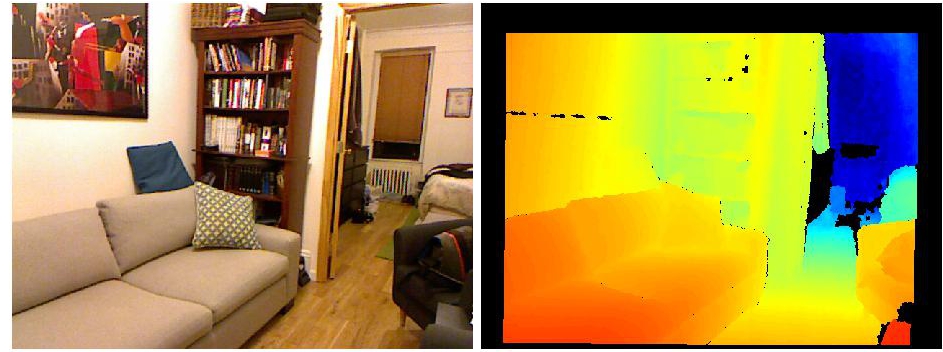

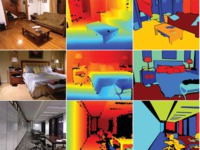

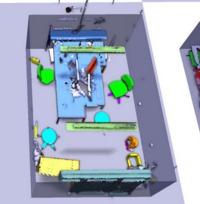

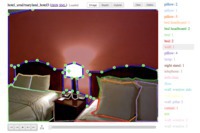

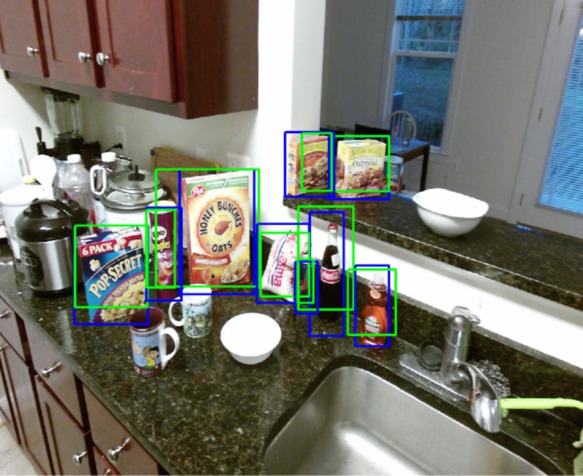

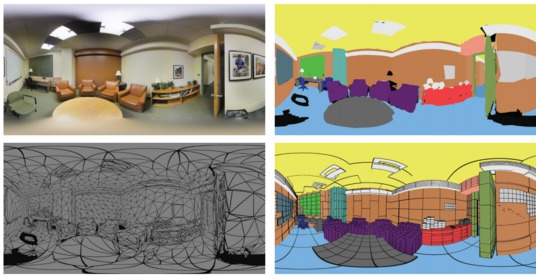

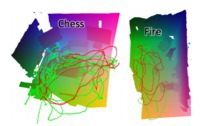

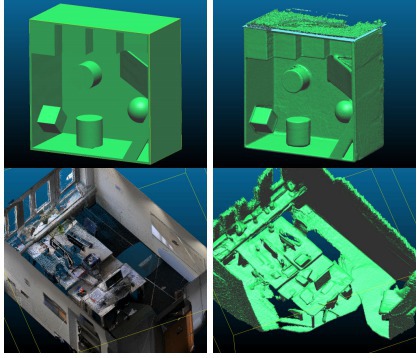

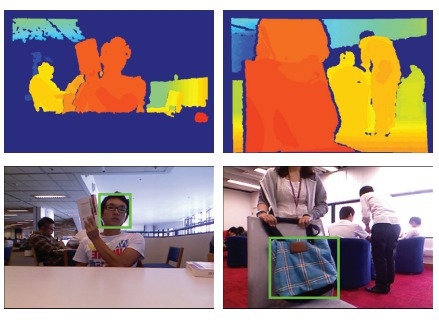

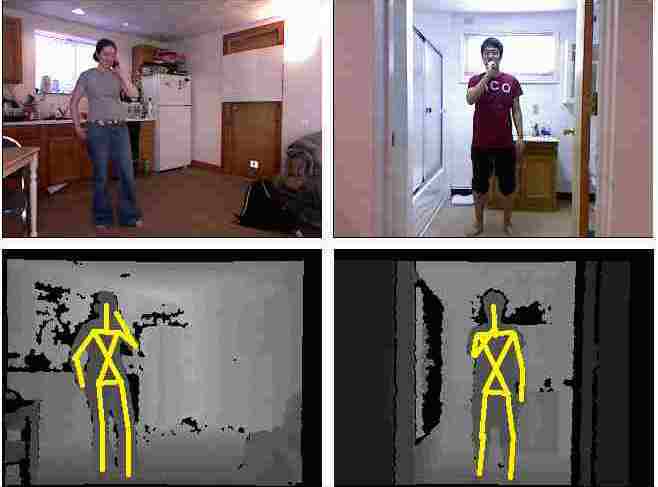

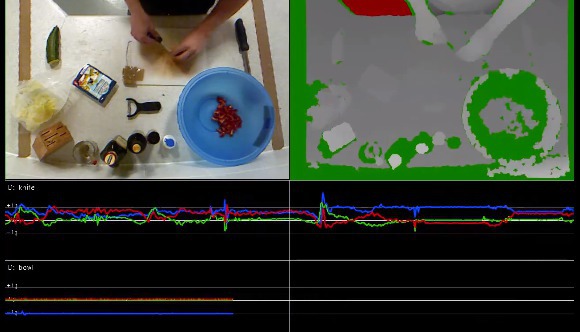

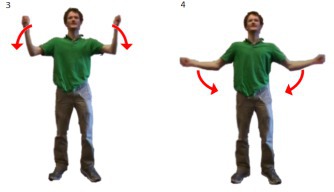

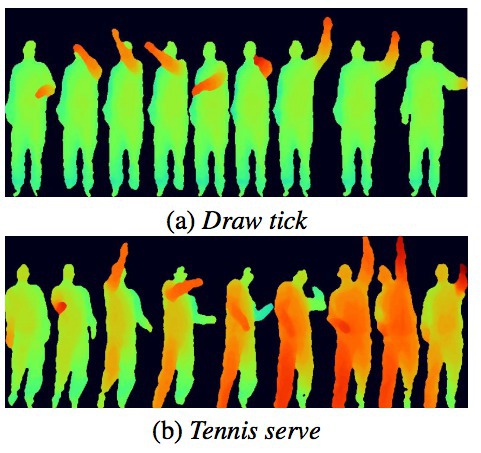

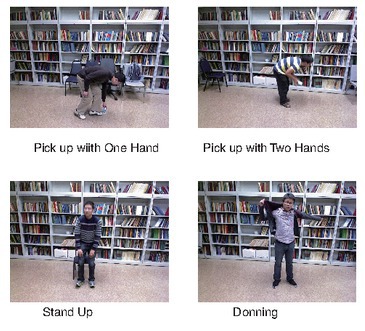

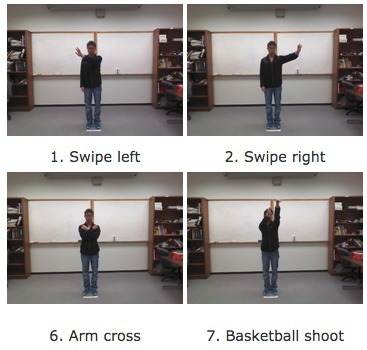

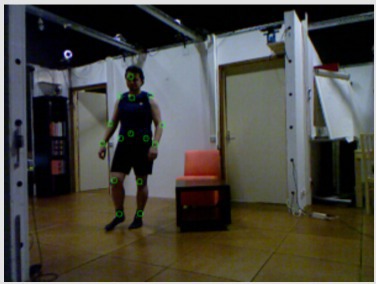

- Pictures!

It has been accepted for publication in the CVPR 2016 workshop "Large Scale 3D Data: Acquisition, Modelling and Analysis".

```bibtex
@inproceedings{firman-cvprw-2016,
  author    = {Michael Firman},
  title     = {{RGBD Datasets: Past, Present and Future}},
  booktitle = {CVPR Workshop on Large Scale 3D Data: Acquisition, Modelling and Analysis},
  year      = {2016}
}
```